Every so often, clients ask us to update their website based on one website grader or another. That isn’t a problem in itself; such tools make it easier to improve websites. It does become a problem when a perfect score on these tools is pursued as an end in itself.

What I’d like to argue is that site evaluation tools, even (and perhaps especially) when combined, cannot prescribe a perfect website. We could spend days, even weeks, implementing their recommendations, racking up costs higher than building a new website from scratch, and it still wouldn’t guarantee traffic, conversions, or satisfied users. Instead, the focus should be on how users experience the website.

To illustrate this, I looked at the grades and overall performance of a few selected websites. Comparing them on these points shows how much bearing website grades really have on a site’s success.

The Scorecards

Page speed is often prioritised over other indicators because of its impact on user behaviour: users may abandon a site over delays of just fractions of a second. And because an abandoned page cannot be redeemed by any of its other qualities, page speed takes precedence.

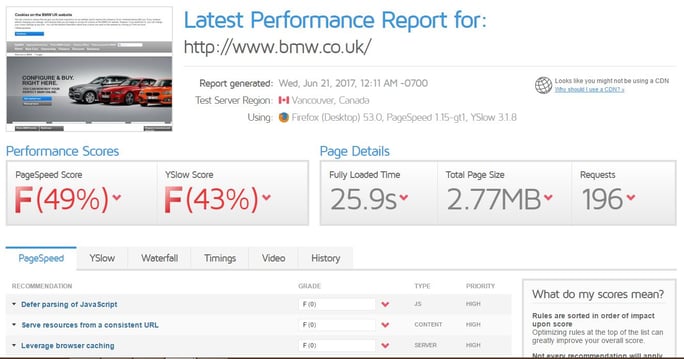

The results from website evaluation tools don’t always align with the way humans experience websites, though. For example, let’s consider the statistics for two car rental companies based in the UK. First up is BMW. Here are its scores on the website grader GTmetrix, along with its site’s traffic overview:

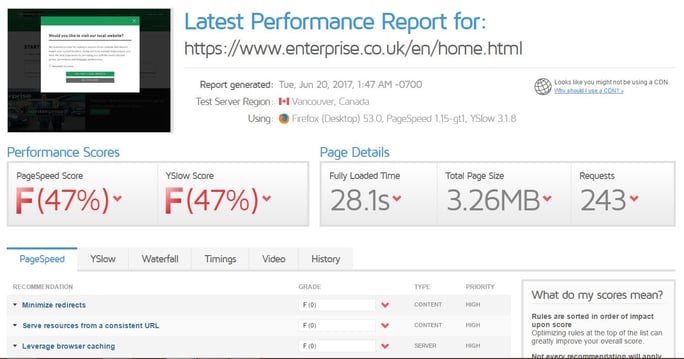

And here are the same results for Enterprise:

Neither website’s score is what you’d call satisfactory, but both draw in a lot of monthly traffic. BMW’s overall traffic is slightly higher, but so is its number of bounces, possibly because of the site’s slower page speed. The test results offer something worth thinking about, but they aren’t conclusive on their own, and they would hardly predict how successful both sites are at attracting visits.

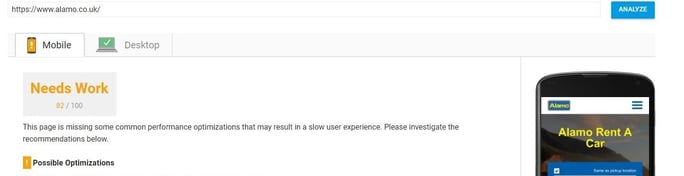

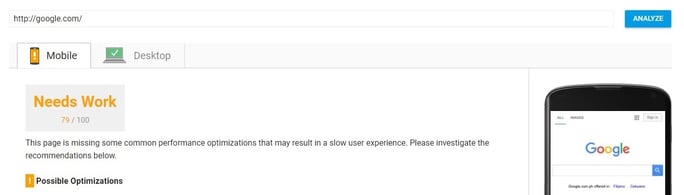

The next comparison uses Google’s PageSpeed report. This time we tested another car rental company, Alamo, and matched it up against none other than Google’s own search engine. What better specimen than the company’s own flagship site, after all?

According to the PageSpeed tool, Alamo’s mobile website “Needs Work” with a score of 82/100. But Google’s own site* fares no better, with 79/100. Google’s own tool rates the company’s website as “Needs Work”, yet that doesn’t stop it from being one of the most visited sites in the world.

*Google has since added a CAPTCHA layer to its website. The PageSpeed tool now assesses the speed of this verification test rather than the page itself, so testing the site yields a higher score.

Grade Breakdown

So the score doesn’t tell all. How do we account for this?

These tools analyse specific aspects of a page. Google PageSpeed, for instance, can assess the features of your page, but it can’t account for the context in which users access it. User experience might also depend on the user’s location and the quality of their network connection.

They apply the same criteria to different kinds of pages. A static page will generally load faster than one with dynamic features, but these days it would be impractical for most sites to consist entirely of static pages. An evaluation tool can’t tell you exactly how to design each page.

User experience is affected by several factors outside the website itself. For instance: What do users need to accomplish? What are their alternatives? What might motivate them to stick with your site?

Take BMW: its site has a leg up thanks to an established brand, strong offline marketing, and the quality of the services it provides, perhaps especially compared with its competitors.

Keep in mind that what ultimately brings users back to a site is satisfaction with it. If they get what they came for with minimal difficulty, they’ll be back, and that’s a sign your site is performing well. Tools can save you time keeping track of best practices, but their results should always be taken in context.

In sum, yes, we can use website graders to evaluate websites. But focusing solely on these tools isn’t practical; it can even end up harming the user experience, which should remain the number one priority.

If you’ve recently had your website assessed, we’d be glad to discuss the results with you and answer any other questions you might have. Just let us know.
